For decades, the Silicon Valley roadmap for artificial intelligence assumed a linear progression: first sensing, then acting, and finally reasoning. We expected AI to evolve as we did, crawling through the physical world before mastering the nuances of Shakespeare.

Instead, we witnessed a biological anomaly.

AI is a “premature genius.” It has mastered the 50,000-year-old human art of complex linguistics while failing at the 500-million-year-old biological requirement of spatial awareness. As we navigate 2026, the industry isn’t pushing “forward” anymore; it is retrofitting. The result is a massive, multi-billion-dollar effort to “reverse-evolve” AI back into the physical and social realms it skipped.

To understand why today’s large language models (LLMs) feel both brilliant and “hollow,” we need to compare the 4-billion-year biological timeline against the 70-year silicon timeline.

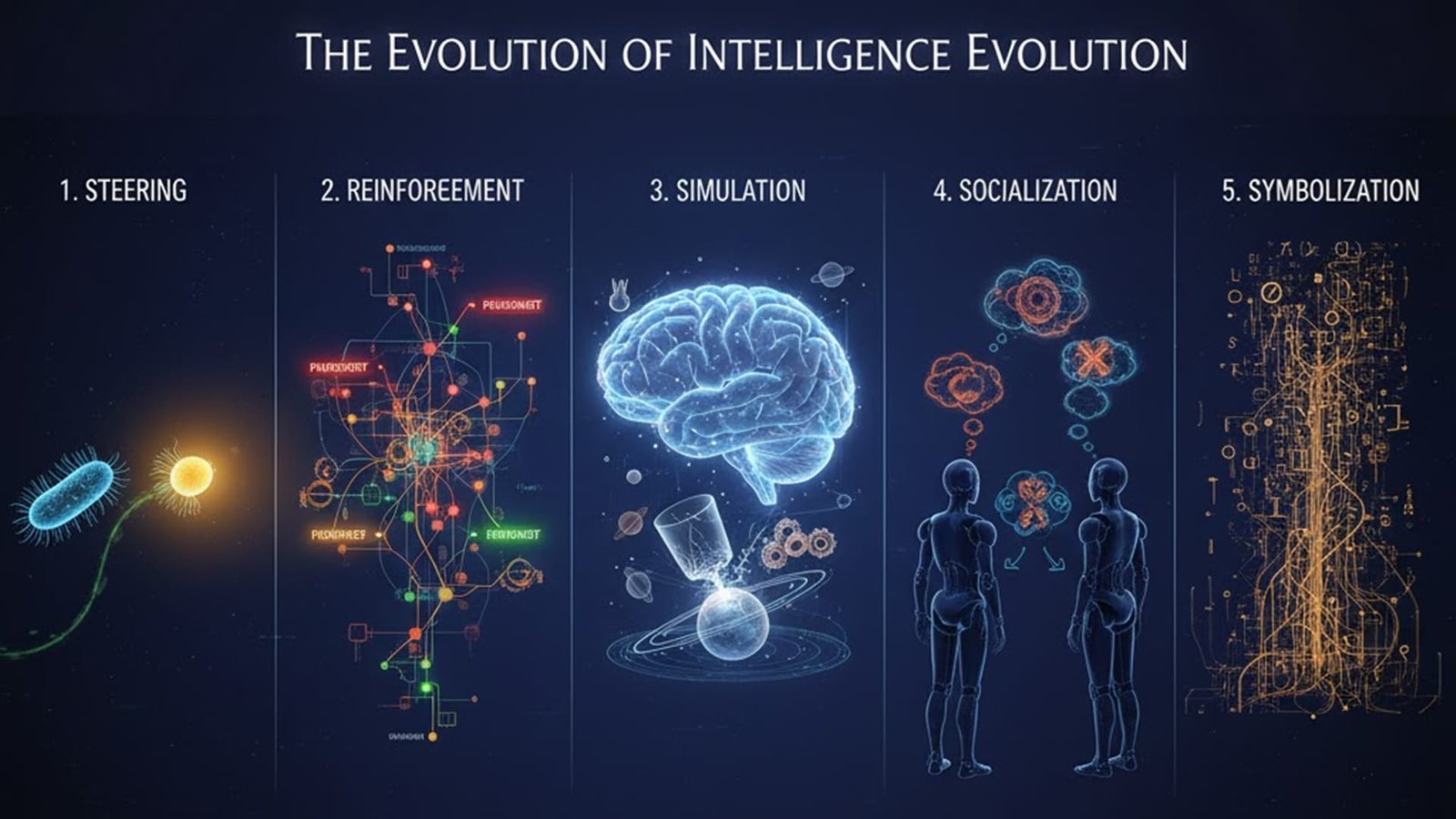

| Evolutionary Stage | Biological Milestone | AI Equivalent | The “Gap” Status |
| --- | --- | --- | --- |
| 1. Steering | 500M years ago: Basic signal processing (Avoid pain/Seek food). | Basic control logic & IF-THEN statements. | Complete |
| 2. Reinforcement | 300M years ago: The Dopamine system (Learning from rewards). | AlphaGo & RLHF (Reinforcement Learning from Human Feedback). | Complete |
| 3. Simulation | 200M years ago: The Neocortex (Building internal World Models). | Physical World Simulators (Sora, World Models). | Critical Gap |
| 4. Socialization | 15M years ago: Theory of Mind (Understanding intent/Social play). | Multi-Agent Systems (MAS). | Active Frontier |
| 5. Symbolization | 100k years ago: Language (Sharing mental models). | GPT-4o, Claude 3.5, Gemini 1.5. | Overshot |

Humanity’s greatest strength is that our language is an output of our physical experience. When a child says “hot,” they are recalling a sensory simulation of a burnt finger. When an AI says “hot,” it is emitting the token that is statistically most likely to come next in that context, based on patterns learned from trillions of tokens of text.

This is the Step 5 Trap. By jumping straight to the “Operating System” of human civilization (Language), AI bypassed the “Hardware Drivers” (Physics and Empathy).

A contemporary AI can describe a glass shattering in poetic detail, yet it lacks an internal “physics engine.” It has no built-in understanding of gravity, friction, or object permanence. This is why 2025 and 2026 have seen a pivot toward Spatial Intelligence. Pioneers like Li Fei-Fei argue that for AI to be truly “general” (AGI), it must have a “body,” whether robotic or simulated, to understand that the world exists in three dimensions rather than as flat strings of text.

AI mimics tone, but it does not understand intent. In human evolution, the ability to guess what a rival or a partner was thinking is widely regarded as a major driver of the brain’s rapid expansion (the “social brain” hypothesis).

Current AI “collaboration” is often just a series of API calls. The 2026 “Social AI” movement focuses on agentic workflows, in which AI agents must learn to negotiate, compromise, and infer hidden meanings, moving from “tool” to “teammate.”

If you want to know where the “Smart Money” is moving, look at the projects “filling the holes.” We are no longer obsessed with adding more parameters to LLMs. Instead, the focus has shifted to:

Expert Insight: “We aren’t building a brain anymore; we are building a nervous system. Language was the easy part. Gravity is the hard part.”

The “Reverse Evolution” of AI is not a setback; it is a stabilization. By backtracking to solve Step 3 (Simulation) and Step 4 (Socialization), the industry is finally giving the “Genius” a “Body.”

For developers and investors, the takeaway is clear: the next unicorn won’t be the one that talks the best. It will be the one that understands the world well enough to move through it, and understands humans well enough to work beside them.

[…] Will AI Go From Chatbots To True AI in 2026? […]